Creating and Scoring Essay Tests

- M.Ed., Curriculum and Instruction, University of Florida

- B.A., History, University of Florida

Essay tests are useful for teachers when they want students to select, organize, analyze, synthesize, and/or evaluate information. In other words, they rely on the upper levels of Bloom's Taxonomy. There are two types of essay questions: restricted and extended response.

- Restricted Response - These essay questions limit what the student will discuss in the essay based on the wording of the question. For example, "State the main differences between John Adams' and Thomas Jefferson's beliefs about federalism," is a restricted response. What the student is to write about has been expressed to them within the question.

- Extended Response - These allow students to select what they wish to include in order to answer the question. For example, "In Of Mice and Men, was George's killing of Lennie justified? Explain your answer." The student is given the overall topic, but they are free to use their own judgment and integrate outside information to help support their opinion.

Student Skills Required for Essay Tests

Before expecting students to perform well on either type of essay question, we must make sure that they have the required skills to excel. Following are four skills that students should have learned and practiced before taking essay exams:

- The ability to select appropriate material from the information learned in order to best answer the question.

- The ability to organize that material in an effective manner.

- The ability to show how ideas relate and interact in a specific context.

- The ability to write effectively in both sentences and paragraphs.

Constructing an Effective Essay Question

Following are a few tips to help in the construction of effective essay questions:

- Begin with the lesson objectives in mind. Know exactly what you want the student to demonstrate by answering the essay question.

- Decide if your goal requires a restricted or extended response. In general, if you wish to see if the student can synthesize and organize the information that they learned, then restricted response is the way to go. However, if you wish them to judge or evaluate something using the information taught during class, then you will want to use the extended response.

- If you are including more than one essay, be cognizant of time constraints. You do not want to punish students because they ran out of time on the test.

- Write the question in a novel or interesting manner to help motivate the student.

- State the number of points that the essay is worth. You can also provide them with a time guideline to help them as they work through the exam.

- If your essay item is part of a larger objective test, make sure that it is the last item on the exam.

Scoring the Essay Item

One of the downfalls of essay tests is that their scoring tends to lack reliability. Even when teachers grade essays with a well-constructed rubric, they still make subjective decisions. Therefore, it is important to be as consistent as possible when scoring your essay items. Here are a few tips to help improve reliability in grading:

- Determine whether you will use a holistic or analytic scoring system before you write your rubric. With the holistic grading system, you evaluate the answer as a whole, rating papers against each other. With the analytic system, you list specific pieces of information and award points for their inclusion.

- Prepare the essay rubric in advance. Determine what you are looking for and how many points you will be assigning for each aspect of the question.

- Avoid looking at names. Some teachers have students write an assigned number on their essays instead of their names to help with this.

- Score one item at a time. This helps ensure that you use the same thinking and standards for all students.

- Avoid interruptions when scoring a specific question. Again, consistency will be increased if you grade the same item on all the papers in one sitting.

- If an important decision like an award or scholarship is based on the score for the essay, obtain two or more independent readers.

- Beware of negative influences that can affect essay scoring. These include handwriting and writing style bias, the length of the response, and the inclusion of irrelevant material.

- Review papers that are on the borderline a second time before assigning a final grade.
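The consistency tips above (anonymous papers, one item at a time, one sitting per item) amount to a particular grading order: every paper on item 1, then every paper on item 2, and so on. The following is a minimal Python sketch of that order, assuming papers are already labeled with numbers instead of names. The names `score_item_by_item` and `score_response` are hypothetical; `score_response` stands in for the teacher's own judgment against the rubric.

```python
from typing import Callable, Dict, List

# Papers are keyed by an anonymous number (not a student name);
# each paper maps an item label such as "Q1" to the response text.
Papers = Dict[int, Dict[str, str]]
Scores = Dict[int, Dict[str, int]]


def score_item_by_item(
    papers: Papers,
    items: List[str],
    score_response: Callable[[str, str], int],
) -> Scores:
    """Score every paper on one item before moving to the next item.

    `score_response(item, response)` is a hypothetical placeholder for the
    grader applying the rubric for that item to a single response.
    """
    scores: Scores = {paper_id: {} for paper_id in papers}
    for item in items:                   # outer loop: one item at a time
        for paper_id in sorted(papers):  # inner loop: every paper on that item
            response = papers[paper_id][item]
            scores[paper_id][item] = score_response(item, response)
    return scores
```

Because the outer loop runs over items rather than students, the standard for a given question is applied to every paper in a single pass.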

Structure and Scoring of the Assessment

The structure of the assessment.

You'll begin by reading a prose passage of 700-1,000 words. This passage will be about as difficult as the readings in first-year courses at UC Berkeley. You'll have up to two hours to read the passage carefully and write an essay in response to a single topic and related questions based on the passage's content. These questions will generally ask you to read thoughtfully and to provide reasoned, concrete, and developed presentations of a specific point of view. Your essay will be evaluated on the basis of your ability to develop your central idea, to express yourself clearly, and to use the conventions of written English.

Five Qualities of a Well-Written Essay

There is no "correct" response for the topic, but there are some things readers will look for in a strong, well-written essay.

- The writer demonstrates that they understood the passage.

- The writer maintains focus on the task assigned.

- The writer leads readers to understand a point of view, if not to accept it.

- The writer develops a central idea and provides specific examples.

- The writer evaluates the reading passage in light of personal experience or observations, or by testing the author's assumptions against their own.

Scoring is typically completed within three weeks after the assessment date. The readers are UC Berkeley faculty members, primarily from College Writing Programs, though faculty from other related departments, such as English or Comparative Literature, might participate as well.

Your essay will be scored independently by two readers, who will not know your identity. They will measure your essay against a scoring guide. If the two readers have different opinions, a third reader will also assess your essay to help reach a final decision. Each reader will give your essay a score on a scale of 1 (lowest) to 6 (highest). If your two scores add up to 8 or higher, you satisfy the Entry Level Writing Requirement and may take any 4-unit "R_A" course (the first half of the Reading and Composition Requirement, usually numbered R1A, though sometimes with a different number). If your combined score is less than 8, you should sign up for College Writing R1A, which satisfies both the Entry Level Writing Requirement and the first-semester ("A") part of the Reading and Composition Requirement.
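The placement arithmetic in that paragraph reduces to a sum and a threshold. A minimal sketch, assuming two valid reader scores on the 1 to 6 scale; the function name is hypothetical, and the third-reader step is left out because the text does not say how a third score is combined with the first two.

```python
from typing import Tuple

ELWR_THRESHOLD = 8  # combined score needed to satisfy the Entry Level Writing Requirement


def combine_reader_scores(reader1: int, reader2: int) -> Tuple[int, bool]:
    """Return (total, satisfies_elwr) for two independent reader scores.

    Each reader scores from 1 (lowest) to 6 (highest). The third-reader
    step used when readers disagree is not modeled here.
    """
    for score in (reader1, reader2):
        if not 1 <= score <= 6:
            raise ValueError(f"score {score} is outside the 1-6 scale")
    total = reader1 + reader2
    return total, total >= ELWR_THRESHOLD
```

For example, `combine_reader_scores(4, 4)` gives `(8, True)` (any R_A course), while `combine_reader_scores(4, 3)` gives `(7, False)` (College Writing R1A).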

The Scoring Guide

The Scoring Guide outlines the characteristics typical of essays at six different levels of competence. Readers assign each essay a score according to its main qualities. Readers take into account the fact that the responses are written within two hours of reading and writing, without a longer period of time for drafting and revision.

An essay with a score of 6 may

- command attention because of its insightful development and mature style.

- present a cogent response to the text, elaborating that response with well-chosen examples and persuasive reasoning.

- present an organization that reinforces the development of the ideas, which are aptly detailed.

- show that its writer can usually choose words well, use sophisticated sentences effectively, and observe the conventions of written English.

An essay with a score of 5 may

- clearly demonstrate competent writing skill.

- present a thoughtful response to the text, elaborating that response with appropriate examples and sensible reasoning.

- present an organization that supports the writer’s ideas, which are developed with greater detail than is typical of an essay scored '4.'

- have a less fluent and complex style than an essay scored '6,' but show that the writer can usually choose words accurately, vary sentences effectively, and observe the conventions of written English.

An essay with a score of 4 may

- be just 'satisfactory.'

- present an adequate response to the text, elaborating that response with sufficient examples and acceptable reasoning.

- demonstrate an organization that generally supports the writer’s ideas, which are developed with sufficient detail.

- use examples and reasoning that are less developed than those in '5' essays.

- show that its writer can usually choose words of sufficient precision, control sentences of reasonable variety, and observe the conventions of written English.

An essay with a score of 3 may

- be unsatisfactory in one or more of the following ways:

  - it may respond to the text illogically

  - it may reflect an incomplete understanding of the text or the topic

  - it may provide insufficient reasoning or lack elaboration with examples, or the examples provided may not be sufficiently detailed to support claims

  - it may be inadequately organized

- have prose characterized by at least one of the following:

  - frequently imprecise word choice

  - little sentence variety

  - occasional major errors in grammar and usage, or frequent minor errors

An essay with a score of 2 may

- show weaknesses, ordinarily of several kinds.

- present a simplistic or inappropriate response to the text, one that may suggest some significant misunderstanding of the text or the topic.

- use organizational strategies that detract from coherence or provide inappropriate or irrelevant detail.

- have prose characterized by:

  - simplistic or inaccurate word choice

  - monotonous or fragmented sentence structure

  - many repeated errors in grammar and usage

An essay with a score of 1 may

- show serious weaknesses.

- disregard the topic's demands, or it may lack structure or development.

- have an organization that fails to support the essay’s ideas.

- be inappropriately brief.

- have a pattern of errors in word choice, sentence structure, grammar, and usage.

Rubric Best Practices, Examples, and Templates

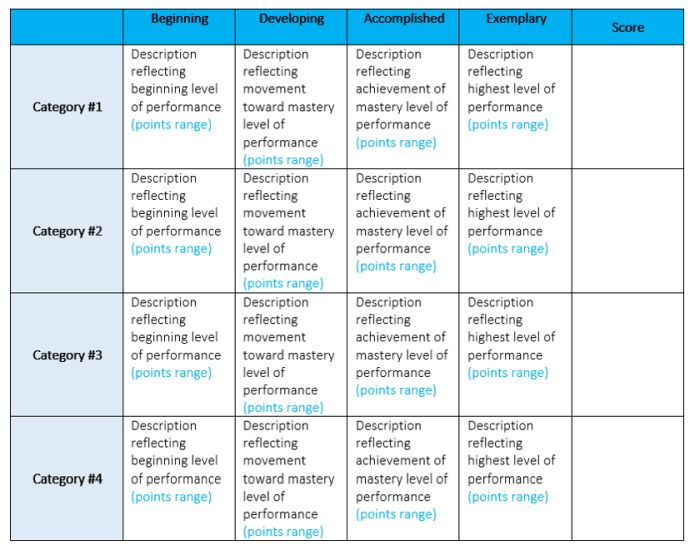

A rubric is a scoring tool that identifies the different criteria relevant to an assignment, assessment, or learning outcome and states the possible levels of achievement in a specific, clear, and objective way. Use rubrics to assess project-based student work including essays, group projects, creative endeavors, and oral presentations.

Rubrics can help instructors communicate expectations to students and assess student work fairly, consistently and efficiently. Rubrics can provide students with informative feedback on their strengths and weaknesses so that they can reflect on their performance and work on areas that need improvement.

How to Get Started

Step 1: Analyze the assignment

The first step in the rubric creation process is to analyze the assignment or assessment for which you are creating a rubric. To do this, consider the following questions:

- What is the purpose of the assignment and your feedback? What do you want students to demonstrate through the completion of this assignment (i.e. what are the learning objectives measured by it)? Is it a summative assessment, or will students use the feedback to create an improved product?

- Does the assignment break down into different or smaller tasks? Are these tasks as important as the main assignment?

- What would an “excellent” assignment look like? An “acceptable” assignment? One that still needs major work?

- How detailed do you want the feedback you give students to be? Do you want/need to give them a grade?

Step 2: Decide what kind of rubric you will use

Types of rubrics: holistic, analytic/descriptive, single-point

Holistic Rubric. A holistic rubric includes all the criteria (such as clarity, organization, mechanics, etc.) to be considered together and included in a single evaluation. With a holistic rubric, the rater or grader assigns a single score based on an overall judgment of the student’s work, using descriptions of each performance level to assign the score.

Advantages of holistic rubrics:

- Can place an emphasis on what learners can demonstrate rather than what they cannot

- Save grader time by minimizing the number of evaluations to be made for each student

- Can be used consistently across raters, provided they have all been trained

Disadvantages of holistic rubrics:

- Provide less specific feedback than analytic/descriptive rubrics

- Can be difficult to choose a score when a student’s work is at varying levels across the criteria

- Any weighting of criteria cannot be indicated in the rubric

Analytic/Descriptive Rubric. An analytic or descriptive rubric often takes the form of a table with the criteria listed in the left column and with levels of performance listed across the top row. Each cell contains a description of what the specified criterion looks like at a given level of performance. Each of the criteria is scored individually.

Advantages of analytic rubrics:

- Provide detailed feedback on areas of strength or weakness

- Each criterion can be weighted to reflect its relative importance

Disadvantages of analytic rubrics:

- More time-consuming to create and use than a holistic rubric

- May not be used consistently across raters unless the cells are well defined

- May result in giving less personalized feedback
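The weighting advantage of analytic rubrics can be made concrete: each criterion earns a level, and the levels are combined according to their weights. The following minimal sketch assumes a hypothetical four-criterion rubric for a final paper on a 4-level scale. The criteria, weights, and function name are illustrations, not a recommended scheme.

```python
from typing import Dict

# Hypothetical criteria and weights for a final paper; weights sum to 1.0.
WEIGHTS: Dict[str, float] = {
    "thesis": 0.3,
    "evidence": 0.4,
    "organization": 0.2,
    "mechanics": 0.1,
}
MAX_LEVEL = 4  # assumed 4-level scale, where 4 is the top level


def weighted_rubric_score(
    levels: Dict[str, int],
    weights: Dict[str, float] = WEIGHTS,
    max_level: int = MAX_LEVEL,
) -> float:
    """Convert per-criterion levels into a weighted score out of 100."""
    if set(levels) != set(weights):
        raise ValueError("score every criterion exactly once")
    for criterion, level in levels.items():
        if not 1 <= level <= max_level:
            raise ValueError(f"{criterion}: level {level} is off the scale")
    total_weight = sum(weights.values())
    # Each criterion contributes its weight times the fraction of the top level reached.
    earned = sum(weights[c] * levels[c] / max_level for c in weights)
    return round(100 * earned / total_weight, 1)
```

With levels of 4 (thesis), 3 (evidence), 4 (organization), and 2 (mechanics), the score is 85.0. Because evidence carries the largest weight, dropping one level there costs 10 points, while dropping one level in mechanics costs only 2.5.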

Single-Point Rubric. A single-point rubric breaks down the components of an assignment into different criteria, but instead of describing different levels of performance, it describes only the “proficient” level. Feedback space is provided for instructors to give individualized comments to help students improve and/or show where they excelled beyond the proficiency descriptors.

Advantages of single-point rubrics:

- Easier to create than an analytic/descriptive rubric

- Perhaps more likely that students will read the descriptors

- Areas of concern and excellence are open-ended

- May remove a focus on the grade/points

- May increase student creativity in project-based assignments

Disadvantage of single-point rubrics:

- Requires more work for instructors writing feedback

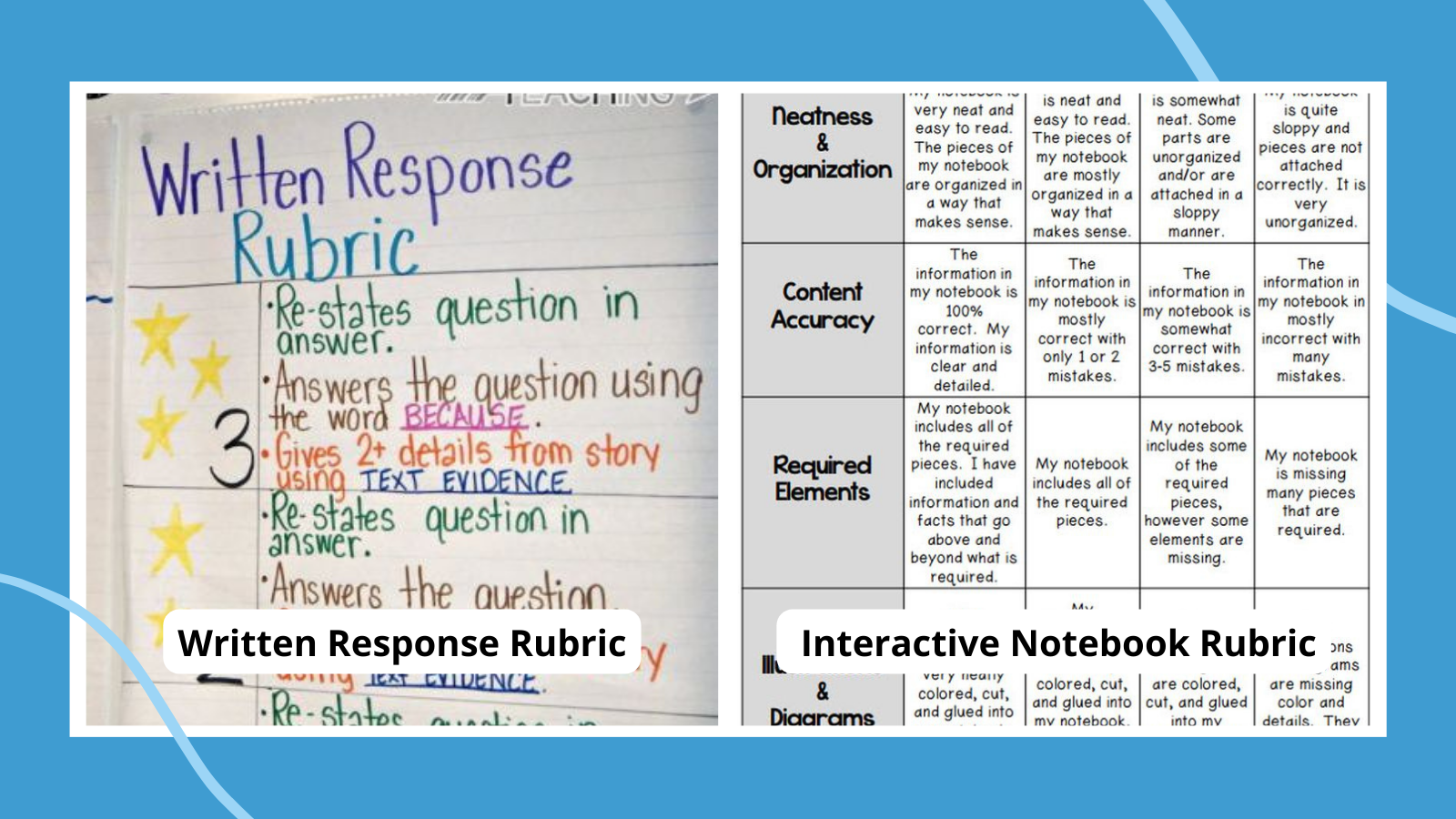

Step 3 (Optional): Look for templates and examples.

You might search Google for “Rubric for persuasive essay at the college level” and see if there are any publicly available examples to start from. Ask your colleagues if they have used a rubric for a similar assignment. Some examples are also available at the end of this article. These rubrics can be a great starting point for you, but follow steps 4, 5, and 6 below to ensure that the rubric matches your assignment description, learning objectives, and expectations.

Step 4: Define the assignment criteria

Make a list of the knowledge and skills you are measuring with the assignment/assessment. Refer to your stated learning objectives, the assignment instructions, past examples of student work, etc., for help.

Helpful strategies for defining grading criteria:

- Collaborate with co-instructors, teaching assistants, and other colleagues

- Brainstorm and discuss with students

- Test the draft criteria against these questions:

  - Can they be observed and measured?

  - Are they important and essential?

  - Are they distinct from other criteria?

  - Are they phrased in precise, unambiguous language?

- Revise the criteria as needed

- Consider whether some are more important than others, and how you will weight them.

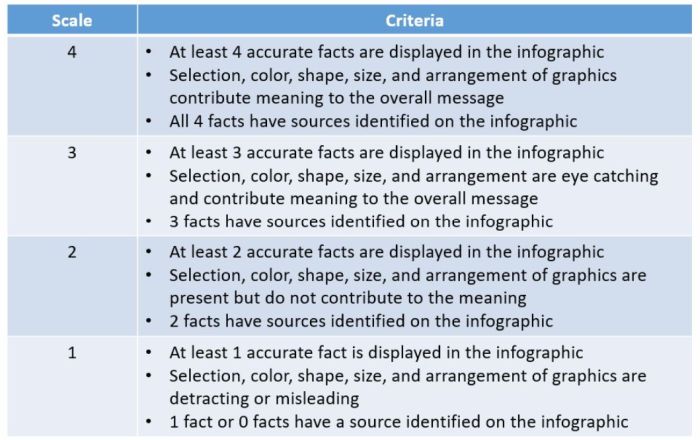

Step 5: Design the rating scale

Most rating scales include between 3 and 5 levels. Consider the following questions when designing your rating scale:

- Given what students are able to demonstrate in this assignment/assessment, what are the possible levels of achievement?

- How many levels would you like to include? (More levels means more detailed descriptions.)

- Will you use numbers and/or descriptive labels for each level of performance? (for example 5, 4, 3, 2, 1 and/or Exceeds expectations, Accomplished, Proficient, Developing, Beginning, etc.)

- Don’t use too many columns, and recognize that some criteria can have more columns than others. The rubric needs to be comprehensible and organized. Pick the right number of columns so that the criteria flow logically and naturally across levels.

Step 6: Write descriptions for each level of the rating scale

Artificial intelligence tools like ChatGPT can be useful for drafting a rubric. Write the prompt you give the AI assistant carefully so that you get what you want: for example, include the assignment description, the criteria you feel are important, and the number of performance levels you want. Use the results as a starting point, and adjust the descriptions as needed.

Building a rubric from scratch

For a single-point rubric, describe what would be considered “proficient” (i.e., B-level work) and provide that description. You might also include suggestions for students outside of the actual rubric about how they might surpass proficient-level work.

For analytic and holistic rubrics, create statements of expected performance at each level of the rubric.

- Consider what descriptor is appropriate for each criterion, e.g., presence vs. absence, complete vs. incomplete, many vs. none, major vs. minor, consistent vs. inconsistent, always vs. never. If you describe an indicator in one level, you will need to describe it in each level.

- You might start with the top/exemplary level. What does it look like when a student has achieved excellence for each/every criterion? Then, look at the “bottom” level. What does it look like when a student has not achieved the learning goals in any way? Then, complete the in-between levels.

- For an analytic rubric, do this for each particular criterion of the rubric so that every cell in the table is filled. These descriptions help students understand your expectations and their performance in regard to those expectations.

Well-written descriptions:

- Describe observable and measurable behavior

- Use parallel language across the scale

- Indicate the degree to which the standards are met
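Step 6 asks that every criterion be described at every level, so that every cell of an analytic rubric is filled. One way to catch gaps before pilot-testing is to store the rubric as a table and list its empty cells. The sketch below is minimal: the level labels come from the example in Step 5, while the criteria, descriptors, and the `missing_cells` name are hypothetical.

```python
from typing import Dict, List, Tuple

# Level labels taken from the Step 5 example, highest first.
LEVELS = ["Exceeds expectations", "Accomplished", "Proficient", "Developing", "Beginning"]

# Rubric as a table: criterion -> {level label -> descriptor}.
Rubric = Dict[str, Dict[str, str]]


def missing_cells(rubric: Rubric, levels: List[str] = LEVELS) -> List[Tuple[str, str]]:
    """Return every (criterion, level) cell that has no written descriptor."""
    gaps = []
    for criterion, row in rubric.items():
        for level in levels:
            if not row.get(level, "").strip():  # absent or blank cell
                gaps.append((criterion, level))
    return gaps
```

If one criterion's "Developing" cell were left blank in a draft, the function would return that single (criterion, level) pair. This flags the cell so it can be written before the rubric goes to students.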

Step 7: Create your rubric

Create your rubric in a table or spreadsheet in Word, Google Docs, Sheets, etc., and then transfer it by typing it into Moodle. You can also use online tools to create the rubric, but you will still have to type the criteria, indicators, levels, etc., into Moodle. Rubric creators: Rubistar, iRubric

Step 8: Pilot-test your rubric

Prior to implementing your rubric in a live course, obtain feedback from:

- Teaching assistants

Try out your new rubric on a sample of student work. After you pilot-test your rubric, analyze the results to consider its effectiveness and revise accordingly.

- Limit the rubric to a single page for reading and grading ease

- Use parallel language. Use similar language and syntax/wording from column to column. Make sure that the rubric can be easily read from left to right or vice versa.

- Use student-friendly language. Make sure the language is learning-level appropriate. If you use academic language or concepts, you will need to teach those concepts.

- Share and discuss the rubric with your students. Students should understand that the rubric is there to help them learn, reflect, and self-assess. If students use a rubric, they will understand the expectations and their relevance to learning.

- Consider scalability and reusability of rubrics. Create rubric templates that you can alter as needed for multiple assignments.

- Maximize the descriptiveness of your language. Avoid words like “good” and “excellent.” For example, instead of saying, “uses excellent sources,” you might describe what makes a resource excellent so that students will know. You might also consider reducing the reliance on quantity, such as a number of allowable misspelled words. Focus instead, for example, on how distracting any spelling errors are.

Example of an analytic rubric for a final paper

Example of a holistic rubric for a final paper

Single-point rubric

More examples:

- Single Point Rubric Template (variation)

- Analytic Rubric Template (make a copy to edit)

- A Rubric for Rubrics

- Bank of Online Discussion Rubrics in different formats

- Mathematical Presentations Descriptive Rubric

- Math Proof Assessment Rubric

- Kansas State Sample Rubrics

- Design Single Point Rubric

Technology Tools: Rubrics in Moodle

- Moodle Docs: Rubrics

- Moodle Docs: Grading Guide (use for single-point rubrics)

Tools with rubrics (other than Moodle)

- Google Assignments

- Turnitin Assignments: Rubric or Grading Form

Other resources

- DePaul University (n.d.). Rubrics.

- Gonzalez, J. (2014). Know your terms: Holistic, Analytic, and Single-Point Rubrics. Cult of Pedagogy.

- Goodrich, H. (1996). Understanding rubrics. Teaching for Authentic Student Performance, 54(4), 14-17. Retrieved from

- Miller, A. (2012). Tame the beast: tips for designing and using rubrics.

- Ragupathi, K., Lee, A. (2020). Beyond Fairness and Consistency in Grading: The Role of Rubrics in Higher Education. In: Sanger, C., Gleason, N. (eds) Diversity and Inclusion in Global Higher Education. Palgrave Macmillan, Singapore.

Best Practices for Designing and Grading Exams

Adapted from CRLT Occasional Paper #24: M.E. Piontek (2008), Center for Research on Learning and Teaching.

The most obvious function of assessment methods (such as exams, quizzes, papers, and presentations) is to enable instructors to make judgments about the quality of student learning (i.e., assign grades). However, the method of assessment also can have a direct impact on the quality of student learning. Students assume that the focus of exams and assignments reflects the educational goals most valued by an instructor, and they direct their learning and studying accordingly (McKeachie & Svinicki, 2006). General grading systems can have an impact as well. For example, a strict bell curve (i.e., norm-referenced grading) has the potential to dampen motivation and cooperation in a classroom, while a system that strictly rewards proficiency (i.e., criterion-referenced grading) could be perceived as contributing to grade inflation. Given the importance of assessment for both faculty and student interactions about learning, how can instructors develop exams that provide useful and relevant data about their students' learning and also direct students to spend their time on the important aspects of a course or course unit? How do grading practices further influence this process?
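The contrast between the two grading systems is easiest to see in a class where everyone performs well. The following is a minimal sketch with two assumptions: a hypothetical 90-point cutoff for criterion-referenced grading, and a hypothetical rule that only the top 20% can earn an A under norm-referenced grading. Both thresholds and both function names are illustrations, not policies from the source.

```python
from typing import Dict, Set

Scores = Dict[str, float]


def criterion_referenced_a(scores: Scores, cutoff: float = 90) -> Set[str]:
    """Everyone who reaches the fixed standard earns an A."""
    return {student for student, score in scores.items() if score >= cutoff}


def norm_referenced_a(scores: Scores, top_percent: int = 20) -> Set[str]:
    """Only the top share of the class earns an A, however high everyone scores."""
    n_a = max(1, len(scores) * top_percent // 100)
    # sorted() is stable, so tied scores keep their input order;
    # a real policy would need an explicit tie rule.
    ranked = sorted(scores, key=lambda student: scores[student], reverse=True)
    return set(ranked[:n_a])
```

For a five-student class that scores 95, 93, 92, 91, and 90, the criterion-referenced rule gives all five students an A, while the norm-referenced rule gives an A only to the student with 95. This is the tension the paragraph describes.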

Guidelines for Designing Valid and Reliable Exams

Ideally, effective exams have four characteristics. They are:

- Valid (providing useful information about the concepts they were designed to test),

- Reliable (allowing consistent measurement and discriminating between different levels of performance),

- Recognizable (instruction has prepared students for the assessment), and

- Realistic (concerning time and effort required to complete the assignment) (Svinicki, 1999).

Most importantly, exams and assignments should focus on the most important content and behaviors emphasized during the course (or particular section of the course). What are the primary ideas, issues, and skills you hope students learn during a particular course/unit/module? These are the learning outcomes you wish to measure. For example, if your learning outcome involves memorization, then you should assess for memorization or classification; if you hope students will develop problem-solving capacities, your exams should focus on assessing students’ application and analysis skills. As a general rule, assessments that focus too heavily on details (e.g., isolated facts, figures, etc.) “will probably lead to better student retention of the footnotes at the cost of the main points” (Halpern & Hakel, 2003, p. 40). As noted in Table 1, each type of exam item may be better suited to measuring some learning outcomes than others, and each has its advantages and disadvantages in terms of ease of design, implementation, and scoring.

Table 1: Advantages and Disadvantages of Commonly Used Types of Achievement Test Items

Adapted from Table 10.1 of Worthen, et al., 1993, p. 261.

General Guidelines for Developing Multiple-Choice and Essay Questions

The following sections highlight general guidelines for developing multiple-choice and essay questions, which are often used in college-level assessment because they readily lend themselves to measuring higher order thinking skills (e.g., application, justification, inference, analysis and evaluation). Yet instructors often struggle to create, implement, and score these types of questions (McMillan, 2001; Worthen, et al., 1993).

Multiple-choice questions have a number of advantages. First, they can measure various kinds of knowledge, including students' understanding of terminology, facts, principles, methods, and procedures, as well as their ability to apply, interpret, and justify. When carefully designed, multiple-choice items also can assess higher-order thinking skills.

Multiple-choice questions are less ambiguous than short-answer items, thereby providing a more focused assessment of student knowledge. Multiple-choice items are superior to true-false items in several ways: on true-false items, students can receive credit for knowing that a statement is incorrect, without knowing what is correct. Multiple-choice items offer greater reliability than true-false items as the opportunity for guessing is reduced with the larger number of options. Finally, an instructor can diagnose misunderstanding by analyzing the incorrect options chosen by students.
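The claim that more options reduce guessing can be checked with a little arithmetic. This rests on a simplifying assumption: a student who does not know an answer picks uniformly at random among the options. Real guessing is rarely that blind, since students can often eliminate weak distractors. Under that assumption, the number of correct guesses follows a binomial distribution. The function names below are hypothetical.

```python
from math import comb


def expected_guessing_score(n_items: int, n_options: int) -> float:
    """Expected number correct if every answer is a blind, uniform guess."""
    return n_items / n_options


def prob_guess_at_least(n_items: int, n_options: int, k_correct: int) -> float:
    """Probability of at least k_correct right answers by blind guessing.

    The number correct is binomial with p = 1 / n_options per item.
    """
    p = 1 / n_options
    return sum(
        comb(n_items, k) * p**k * (1 - p) ** (n_items - k)
        for k in range(k_correct, n_items + 1)
    )
```

On a 20-item test, a blind guesser expects 10 correct on true-false items but only 5 on four-option items. The chance of guessing 14 or more correct falls from roughly 6% (`prob_guess_at_least(20, 2, 14)`) to well under 0.01% with four options.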

A disadvantage of multiple-choice items is that they require developing incorrect, yet plausible, options that can be difficult to create. In addition, multiple-choice questions do not allow instructors to measure students’ ability to organize and present ideas. Finally, because it is much easier to create multiple-choice items that test recall and recognition rather than higher order thinking, multiple-choice exams run the risk of not assessing the deep learning that many instructors consider important (Gronlund & Linn, 1990; McMillan, 2001).

Guidelines for writing multiple-choice items include advice about stems, correct answers, and distractors (McMillan, 2001, p. 150; Piontek, 2008):

- Stems pose the problem or question.

- Is the stem stated as clearly, directly, and simply as possible?

- Is the problem described fully in the stem?

- Is the stem stated positively, to avoid the possibility that students will overlook terms like “no,” “not,” or “least”?

- Does the stem provide only information relevant to the problem?

Possible responses include the correct answer and distractors, or the incorrect choices. Multiple-choice questions usually have at least three distractors.

- Are the distractors plausible to students who do not know the correct answer?

- Is there only one correct answer?

- Are all the possible answers parallel with respect to grammatical structure, length, and complexity?

- Are the options short?

- Are complex options avoided? Are options placed in logical order?

- Are correct answers spread equally among all the choices? (For example, is answer “A” correct about the same number of times as options “B” or “C” or “D”)?
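The last check in the list, whether correct answers are spread evenly across the choices, is easy to automate once the answer key is written as a string of letters. The following is a minimal sketch. The four-option key format, the tolerance of one, and the function names are assumptions, not part of the cited guidelines.

```python
from collections import Counter
from typing import Dict


def answer_key_counts(key: str, options: str = "ABCD") -> Dict[str, int]:
    """Count how often each option letter is the correct answer."""
    counts = Counter(key)
    unexpected = set(counts) - set(options)
    if unexpected:
        raise ValueError(f"unexpected letters in key: {sorted(unexpected)}")
    return {option: counts[option] for option in options}


def is_balanced(key: str, options: str = "ABCD", tolerance: int = 1) -> bool:
    """True if the most- and least-used options differ by at most `tolerance`."""
    counts = answer_key_counts(key, options)
    return max(counts.values()) - min(counts.values()) <= tolerance
```

A ten-item key such as `"ABCDDCBAAB"` counts A:3, B:3, C:2, D:2 and passes. A key where "A" is correct seven times out of ten would be flagged.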

An example of a good multiple-choice question that assesses higher-order thinking skills is the following test question from pharmacy (Park, 2008):

Patient WC was admitted for third-degree burns over 75% of his body. The attending physician asks you to start this patient on antibiotic therapy. Which one of the following is the best reason why WC would need antibiotic prophylaxis?

a. His burn injuries have broken down the innate immunity that prevents microbial invasion.

b. His injuries have inhibited his cellular immunity.

c. His injuries have impaired antibody production.

d. His injuries have induced the bone marrow, thus activated immune system

A second question builds on the first by describing the patient’s labs two days later, asking the students to develop an explanation for the subsequent lab results. (See Piontek, 2008 for the full question.)

Essay questions can tap complex thinking by requiring students to organize and integrate information, interpret information, construct arguments, give explanations, evaluate the merit of ideas, and carry out other types of reasoning (Cashin, 1987; Gronlund & Linn, 1990; McMillan, 2001; Thorndike, 1997; Worthen, et al., 1993). Restricted response essay questions are good for assessing basic knowledge and understanding and generally require a brief written response (e.g., “State two hypotheses about why birds migrate. Summarize the evidence supporting each hypothesis” [Worthen, et al., 1993, p. 277].) Extended response essay items allow students to construct a variety of strategies, processes, interpretations and explanations for a question, such as the following:

The framers of the Constitution strove to create an effective national government that balanced the tension between majority rule and the rights of minorities. What aspects of American politics favor majority rule? What aspects protect the rights of those not in the majority? Drawing upon material from your readings and the lectures, did the framers successfully balance this tension? Why or why not? (Shipan, 2008).

In addition to measuring complex thinking and reasoning, advantages of essays include the potential for motivating better study habits and providing the students flexibility in their responses. Instructors can evaluate how well students are able to communicate their reasoning with essay items, and essay items are usually less time-consuming to construct than multiple-choice items that measure reasoning.

The major disadvantages of essays include the amount of time instructors must devote to reading and scoring student responses, and the importance of developing and using carefully constructed criteria/rubrics to ensure reliability of scoring. Essays can assess only a limited amount of content in one testing period/exam due to the length of time required for students to respond to each essay item. As a result, essays do not provide a good sampling of content knowledge across a curriculum (Gronlund & Linn, 1990; McMillan, 2001).

Guidelines for writing essay questions include the following (Gronlund & Linn, 1990; McMillan, 2001; Worthen et al., 1993):

- Restrict the use of essay questions to educational outcomes that are difficult to measure using other formats. For example, true-false, fill-in-the-blank, or multiple-choice questions are better measures of recall knowledge. Outcomes that essay questions measure well, with sample prompts, include:

- Generalizations: State a set of principles that can explain the following events.

- Synthesis: Write a well-organized report that shows…

- Evaluation: Describe the strengths and weaknesses of…

- Write the question clearly so that students do not feel that they are guessing at “what the instructor wants me to do.”

- Indicate the amount of time and effort students should spend on each essay item.

- Avoid letting students choose which essay questions to answer. Offering a choice decreases the validity and reliability of the test because each student is essentially taking a different exam.

- Consider using several narrowly focused questions (rather than one broad question) that elicit different aspects of students’ skills and knowledge.

- Make sure there is enough time to answer the questions.

Guidelines for scoring essay questions include the following (Gronlund & Linn, 1990; McMillan, 2001; Wiggins, 1998; Worthen et al., 1993; Writing and grading essay questions, 1990):

- Outline what constitutes an expected answer.

- Select an appropriate scoring method based on the criteria. A rubric is a scoring key that indicates the criteria for scoring and the number of points to be assigned for each criterion. A sample rubric for a take-home history exam question might look like the following:

For other examples of rubrics, see CRLT Occasional Paper #24 (Piontek, 2008).

- Clarify the role of writing mechanics and other factors independent of the educational outcomes being measured. For example, how does grammar or use of scientific notation figure into your scoring criteria?

- Keep students’ responses anonymous while scoring, and grade tests in a random order (e.g., shuffle the pile) to increase the accuracy of the scoring.

- Use a systematic process for scoring each essay item. Assessment guidelines suggest scoring all answers for an individual essay question in one continuous process, rather than scoring all answers to all questions for an individual student. This system makes it easier to remember the criteria for scoring each answer.
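The last two guidelines (anonymous, randomly ordered scoring, and scoring one question across all students before moving to the next) can be sketched as a small grading routine. This is a minimal Python sketch under stated assumptions: each exam is a dictionary from a question ID to answer text, the names `anonymize` and `grade_by_question` are hypothetical, and each entry in `scorers` is a placeholder for the instructor applying that question's criteria by hand.

```python
import random
from typing import Callable, Dict, List, Tuple

# Hypothetical layout: each exam maps a question ID (e.g. "Q1") to the answer text.
Exam = Dict[str, str]


def anonymize(
    exams: Dict[str, Exam], rng: random.Random
) -> Tuple[Dict[str, Exam], Dict[str, str]]:
    """Swap student names for opaque codes; keep a separate key for re-identification."""
    names = list(exams)
    rng.shuffle(names)  # so codes don't follow alphabetical or submission order
    coded: Dict[str, Exam] = {}
    key: Dict[str, str] = {}
    for i, name in enumerate(names, start=1):
        code = f"S{i:03d}"
        coded[code] = exams[name]
        key[code] = name
    return coded, key


def grade_by_question(
    coded: Dict[str, Exam],
    questions: List[str],
    scorers: Dict[str, Callable[[str], int]],
    rng: random.Random,
) -> Dict[str, Dict[str, int]]:
    """Score every answer to one question before moving on to the next question."""
    scores: Dict[str, Dict[str, int]] = {code: {} for code in coded}
    for q in questions:
        pile = list(coded)
        rng.shuffle(pile)  # a fresh random order for each question ("shuffle the pile")
        for code in pile:
            # scorers[q] stands in for the grader applying that question's criteria.
            scores[code][q] = scorers[q](coded[code][q])
    return scores
```

Keeping the code-to-name `key` aside until every question is scored preserves anonymity, and reshuffling the pile for each question keeps any one student's answers from always being read first or last.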

You can also use these guidelines for scoring essay items to create grading processes and rubrics for students’ papers, oral presentations, course projects, and websites. For other grading strategies, see Responding to Student Writing – Principles & Practices and Commenting Effectively on Student Writing.

Cashin, W. E. (1987). Improving essay tests. Idea Paper, No. 17. Manhattan, KS: Center for Faculty Evaluation and Development, Kansas State University.

Gronlund, N. E., & Linn, R. L. (1990). Measurement and evaluation in teaching (6th ed.). New York: Macmillan Publishing Company.

Halpern, D. H., & Hakel, M. D. (2003). Applying the science of learning to the university and beyond. Change, 35(4), 37-41.

McKeachie, W. J., & Svinicki, M. D. (2006). Assessing, testing, and evaluating: Grading is not the most important function. In McKeachie's Teaching tips: Strategies, research, and theory for college and university teachers (12th ed., pp. 74-86). Boston: Houghton Mifflin Company.

McMillan, J. H. (2001). Classroom assessment: Principles and practice for effective instruction. Boston: Allyn and Bacon.

Park, J. (2008, February 4). Personal communication. University of Michigan College of Pharmacy.

Piontek, M. (2008). Best practices for designing and grading exams. CRLT Occasional Paper No. 24. Ann Arbor, MI: Center for Research on Learning and Teaching.

Shipan, C. (2008, February 4). Personal communication. University of Michigan Department of Political Science.

Svinicki, M. D. (1999a). Evaluating and grading students. In Teachers and students: A sourcebook for UT-Austin faculty (pp. 1-14). Austin, TX: Center for Teaching Effectiveness, University of Texas at Austin.

Thorndike, R. M. (1997). Measurement and evaluation in psychology and education. Upper Saddle River, NJ: Prentice-Hall, Inc.

Wiggins, G. P. (1998). Educative assessment: Designing assessments to inform and improve student performance. San Francisco: Jossey-Bass Publishers.

Worthen, B. R., Borg, W. R., & White, K. R. (1993). Measurement and evaluation in the schools. New York: Longman.

Writing and grading essay questions. (1990, September). For Your Consideration, No. 7. Chapel Hill, NC: Center for Teaching and Learning, University of North Carolina at Chapel Hill.


SAT Essay Rubric: Full Analysis and Writing Strategies

We're about to dive deep into the details of that least beloved* of SAT sections, the SAT essay. Prepare for a discussion of the SAT essay rubric and how the SAT essay is graded based on that. I'll break down what each item on the rubric means and what you need to do to meet those requirements.

On the SAT, the last section you'll encounter is the (optional) essay. You have 50 minutes to read a passage, analyze the author's argument, and write an essay. If you write off-topic or plagiarize (that is, submit work that isn't your own), you'll get a 0 on your essay. Otherwise, your essay is scored by two graders, each of whom rates it on a scale of 1-4 in Reading, Analysis, and Writing, for a total score out of 8 in each of those three areas. But how do these graders assign your writing a numerical grade? By using an essay scoring guide, or rubric.

*may not actually be the least belovèd.
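The score arithmetic described above (two graders, each rating 1-4 in each of three areas, summed to a score out of 8 per area) is easy to do by hand, but a minimal Python sketch makes the range explicit. The function name `combine_scores` is hypothetical, and the sketch covers only the summing step, not the 0 given to off-topic or plagiarized essays.

```python
from typing import Dict

DIMENSIONS = ("Reading", "Analysis", "Writing")


def combine_scores(grader_a: Dict[str, int], grader_b: Dict[str, int]) -> Dict[str, int]:
    """Add two graders' 1-4 ratings to get a 2-8 score in each dimension."""
    totals: Dict[str, int] = {}
    for dim in DIMENSIONS:
        a, b = grader_a[dim], grader_b[dim]
        if not (1 <= a <= 4 and 1 <= b <= 4):
            raise ValueError(f"{dim} ratings must be between 1 and 4, got {a} and {b}")
        totals[dim] = a + b
    return totals


print(combine_scores(
    {"Reading": 3, "Analysis": 2, "Writing": 4},
    {"Reading": 4, "Analysis": 2, "Writing": 3},
))
# {'Reading': 7, 'Analysis': 4, 'Writing': 7}
```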


UPDATE: SAT Essay No Longer Offered


In January 2021, the College Board announced that after June 2021, it would no longer offer the Essay portion of the SAT (except at schools that opt in during School Day Testing). It is no longer possible to take the SAT Essay unless your school is one of the small number that choose to offer it during SAT School Day Testing.

While most colleges had already made SAT Essay scores optional, this move by the College Board means no colleges now require the SAT Essay. It will also likely lead to additional college application changes, such as not looking at essay scores at all for the SAT or ACT, as well as potentially requiring additional writing samples for placement.

What does the end of the SAT Essay mean for your college applications? Check out our article on the College Board's SAT Essay decision for everything you need to know.

The Complete SAT Essay Grading Rubric: Item-by-Item Breakdown

Based on the College Board’s stated Reading, Analysis, and Writing criteria, I've created the below charts (for easier comparison across score points). For the purpose of going deeper into just what the SAT is looking for in your essay, I've then broken down each category further (with examples).

The information in all three charts is taken from the College Board site.

The biggest change to the SAT essay (and the thing that really distinguishes it from the ACT essay) is that you are required to read and analyze a text, then write about your analysis of the author's argument in your essay. Your "Reading" grade on the SAT essay reflects how well you were able to demonstrate your understanding of the text and the author's argument in your essay.

You'll need to show your understanding of the text on two different levels: the surface level of getting your facts right and the deeper level of getting the relationship of the details and the central ideas right.

Surface Level: Factual Accuracy

One of the most important ways you can show you've actually read the passage is making sure you stick to what is said in the text. If you’re writing about things the author didn’t say, or things that contradict other things the author said, your argument will be fundamentally flawed.

For instance, take this quotation from a (made-up) passage about why a hot dog is not a sandwich:

“The fact that you can’t, or wouldn’t, cut a hot dog in half and eat it that way, proves that a hot dog is once and for all NOT a sandwich.”

Here's an example of a factually inaccurate paraphrasing of this quotation:

The author builds his argument by discussing how, since hot dogs are often served cut in half, this makes them different from sandwiches.

The paraphrase contradicts the passage, and so would negatively affect your reading score. Now let's look at an accurate paraphrasing of the quotation:

The author builds his argument by discussing how, since hot dogs are never served cut in half, they are therefore different from sandwiches.

It's also important to be faithful to the text when you're using direct quotations from the passage. Misquoting or badly paraphrasing the author’s words weakens your essay, because the evidence you’re using to support your points is faulty.

Higher Level: Understanding of Central Ideas

The next step beyond being factually accurate about the passage is showing that you understand the central ideas of the text and how details of the passage relate back to this central idea.

Why does this matter? In order to be able to explain why the author is persuasive, you need to be able to explain the structure of the argument. And you can’t deconstruct the author's argument if you don’t understand the central idea of the passage and how the details relate to it.

Here's an example of a statement about our fictional "hot dogs are not sandwiches" passage that shows understanding of the central idea of the passage:

Hodgman’s third primary defense of why hot dogs are not sandwiches is that a hot dog is not a subset of any other type of food. He uses the analogy of asking the question “is cereal milk a broth, sauce, or gravy?” to show that making such a comparison between hot dogs and sandwiches is patently illogical.

The above statement takes one step beyond merely being factually accurate to explain the relation between different parts of the passage (in this case, the relation between the "what is cereal milk?" analogy and the hot dog/sandwich debate).

Of course, if you want to score well in all three essay areas, you’ll need to do more in your essay than merely summarizing the author’s argument. This leads directly into the next grading area of the SAT Essay.

The items covered under the Analysis criterion are the most important when it comes to writing a strong essay. You can use well-spelled vocabulary in sentences with varied structure all you want, but if you don't analyze the author's argument, demonstrate critical thinking, and support your position, you will not get a high Analysis score.

Because this category is so important, I've broken it down even further into its two different (but equally important) component parts to make sure everything is as clearly explained as possible.

Part I: Critical Thinking (Logic)

Critical thinking, also known as critical reasoning, also known as logic, is the skill that SAT essay graders are really looking to see displayed in the essay. You need to be able to evaluate and analyze the claim put forward in the prompt. This is where a lot of students may get tripped up, because they think “oh, well, if I can just write a lot, then I’ll do well.” While there is some truth to the assertion that longer essays tend to score higher, if you don’t display critical thinking you won’t be able to get a top score on your essay.

What do I mean by critical thinking? Let's take the previous prompt example:

Write an essay in which you explain how Hodgman builds an argument to persuade his audience that the hot dog cannot, and never should be, considered a sandwich.

An answer to this prompt that does not display critical thinking (and would fall into a 1 or 2 on the rubric) would be something like:

The author argues that hot dogs aren’t sandwiches, which is persuasive to the reader.

While this does evaluate the prompt (by providing a statement that the author's claim "is persuasive to the reader"), there is no corresponding analysis. An answer to this prompt that displays critical thinking (and would net a higher score on the rubric) could be something like this:

The author uses analogies to hammer home his point that hot dogs are not sandwiches. Because the readers will readily believe the first part of the analogy is true, they will be more likely to accept that the second part (that hot dogs aren't sandwiches) is true as well.

See the difference? Critical thinking involves reasoning your way through a situation (analysis) as well as making a judgment (evaluation). On the SAT essay, however, you can’t just stop at abstract critical reasoning - analysis involves one more crucial step...

Part II: Examples, Reasons, and Other Evidence (Support)

The other piece of the puzzle (apparently this is a tiny puzzle) is making sure you are able to back up your point of view and critical thinking with concrete evidence. The SAT essay rubric says that the best (that is, 4-scoring) essay uses “relevant, sufficient, and strategically chosen support for claim(s) or point(s) made.” This means you can’t stop at abstract reasoning like the analogy explanation in Part I.

That explanation is a good starting point, but if you don't back up your point of view with quoted or paraphrased information from the text to support your discussion of the way the author builds his/her argument, you will not be able to get above a 3 on the Analysis portion of the essay (and possibly the Reading portion as well, if you don't show you've read the passage). Let's look at an example of how you might support an interpretation of the author's effect on the reader using facts from the passage:

The author’s reference to the Biblical story about King Solomon elevates the debate about hot dogs from a petty squabble between friends to a life-or-death disagreement. The reader cannot help but see the parallels between the two situations and thus find themselves agreeing with the author on this point.

Does the author's reference to King Solomon actually "elevate the debate," causing the reader to agree with the author? From the sentences above, it certainly seems plausible that it might. While your facts do need to be correct, you get a little more leeway with your interpretations of how the author’s persuasive techniques might affect the audience. As long as you can make a convincing argument for the effect a technique the author uses might have on the reader, you’ll be good.


Did I just blow your mind? Read more about the secrets the SAT doesn’t want you to know in this article.

Your Writing score on the SAT essay is not just a reflection of your grasp of the conventions of written English (although it is that as well). You'll also need to be focused, organized, and precise.

Because there are a lot of different factors that go into calculating your Writing score, I've divided the discussion of this rubric area into five separate items:

- Precise Central Claim

- Organization

- Vocab and Word Choice

- Sentence Structure

- Grammar, Usage, and Mechanics

One of the most basic rules of the SAT essay is that you need to express a clear opinion on the "assignment" (the prompt). While in school (and everywhere else in life, pretty much) you’re encouraged to take into account all sides of a topic, it behooves you to NOT do this on the SAT essay. Why? Because you only have 50 minutes to read the passage, analyze the author's argument, and write the essay, there's no way you can discuss every single way in which the author builds his/her argument, every single detail of the passage, or a nuanced argument about what works and what doesn't work.

Instead, I recommend focusing your discussion on a few key ways the author is successful in persuading his/her audience of his/her claim.

Let’s go back to the assignment we've been using as an example throughout this article:

"Write an essay in which you explain how Hodgman builds an argument to persuade his audience that the hot dog cannot, and never should be, considered a sandwich."

Your instinct (trained from many years of schooling) might be to answer:

"There are a variety of ways in which the author builds his argument."

This is a nice, vague statement that leaves you a lot of wiggle room. If you disagree with the author, it's also a way of avoiding having to say that the author is persuasive. Don't fall into this trap! You do not necessarily have to agree with the author's claim in order to analyze how the author persuades his/her readers that the claim is true.

Here's an example of a precise central claim about the example assignment:

The author effectively builds his argument that hot dogs are not sandwiches by using logic, allusions to history and mythology, and factual evidence.

In contrast to the vague claim that "There are a variety of ways in which the author builds his argument," this thesis both specifies what the author's argument is and the ways in which he builds the argument (that you'll be discussing in the essay).

While it's extremely important to make sure your essay has a clear point of view, strong critical reasoning, and support for your position, that's not enough to get you a top score. You need to make sure that your essay "demonstrates a deliberate and highly effective progression of ideas both within paragraphs and throughout the essay."

What does this mean? Part of the way you can make sure your essay is "well organized" has to do with following standard essay construction points. Don't write your essay in one huge paragraph; instead, include an introduction (with your thesis stating your point of view), body paragraphs (one for each example, usually), and a conclusion. This structure might seem boring, but it really works to keep your essay organized, and the more clearly organized your essay is, the easier it will be for the essay grader to understand your critical reasoning.

The second part of this criterion has to do with keeping your essay focused, making sure it contains "a deliberate and highly effective progression of ideas." You can't just say "well, I have an introduction, body paragraphs, and a conclusion, so I guess my essay is organized" and expect to get a 4/4 on your essay. You need to make sure that each paragraph is also organized. Recall the sample prompt:

“Write an essay in which you explain how Hodgman builds an argument to persuade his audience that the hot dog cannot, and never should be, considered a sandwich.”

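And our hypothetical thesis:

The author effectively builds his argument that hot dogs are not sandwiches by using logic, allusions to history and mythology, and factual evidence.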

Let's say that you're writing the paragraph about the author's use of logic to persuade his reader that hot dogs aren't sandwiches. You should NOT just list ways that the author is logical in support of his claim, then explain why logic in general is an effective persuasive device. While your points might all be valid, your essay would be better served by connecting each instance of logic in the passage with an explanation of how that example of logic persuades the reader to agree with the author.

Above all, it is imperative that you make your thesis (your central claim) clear in the opening paragraph of your essay - this helps the grader keep track of your argument. There's no reason you’d want to make following your reasoning more difficult for the person grading your essay (unless you’re cranky and don’t want to do well on the essay. Listen, I don’t want to tell you how to live your life).

In your essay, you should use a wide array of vocabulary (and use it correctly). An essay that scores a 4 in Writing on the grading rubric “demonstrates a consistent use of precise word choice.”

You’re allowed a few errors, even on a 4-scoring essay, so you can sometimes get away with misusing a word or two. In general, though, it’s best to stick to using words you are certain you not only know the meaning of, but also know how to use. If you’ve been studying up on vocab, make sure you practice using the words you’ve learned in sentences, and have those sentences checked by someone who is good at writing (in English), before you use those words in an SAT essay.

Creating elegant, non-awkward sentences is the thing I struggle most with under time pressure. For instance, here’s my first try at the previous sentence: “Making sure a sentence structure makes sense is the thing that I have the most problems with when I’m writing in a short amount of time” (hahaha NOPE - way too convoluted and wordy, self). As another example, take a look at these two excerpts from the hypothetical essay discussing how the author persuaded his readers that a hot dog is not a sandwich:

Score of 2: "The author makes his point by critiquing the argument against him. The author pointed out the logical fallacy of saying a hot dog was a sandwich because it was meat "sandwiched" between two breads. The author thus persuades the reader his point makes sense to be agreed with and convinces them."

The above sentences lack variety in structure (they all begin with the words "the author"), and the last sentence has serious flaws in its structure (it makes no sense).

Score of 4: "The author's rigorous examination of his opponent's position invites the reader, too, to consider this issue seriously. By laying out his reasoning, step by step, Hodgman makes it easy for the reader to follow along with his train of thought and arrive at the same destination that he has. This destination is Hodgman's claim that a hot dog is not a sandwich."

The above sentences demonstrate variety in sentence structure (they don't all begin with the same word and don't have the same underlying structure) while still advancing the point of the essay.

In general, if you're doing well in all the other Writing areas, your sentence structures will also naturally vary. If you're really worried that your sentences are not varied enough, however, my advice for working on "demonstrating meaningful variety in sentence structure" (without ending up with terribly worded sentences) is twofold:

- Read over what you’ve written before you hand it in and change any wordings that seem awkward, clunky, or just plain incorrect.

- As you’re doing practice essays, have a friend, family member, or teacher who is good at (English) writing look over your essays and point out any issues that arise.

This part of the Writing grade is all about the nitty gritty details of writing: grammar, punctuation, and spelling. It's rare that an essay with serious flaws in this area can score a 4/4 in Reading, Analysis, or Writing, because such persistent errors often "interfere with meaning" (that is, persistent errors make it difficult for the grader to understand what you're trying to get across).

On the other hand, if they occur in small quantities, grammar/punctuation/spelling errors are also the things that are most likely to be overlooked. If two essays are otherwise of equal quality, but one writer misspells "definitely" as "definately" and the other writer fails to explain how one of her examples supports her thesis, the first writer will receive a higher essay score. It's only when poor grammar, use of punctuation, and spelling start to make it difficult to understand your essay that the graders start penalizing you.

My advice for working on this rubric area is the same advice as for sentence structure: look over what you’ve written to double check for mistakes, and ask someone who’s good at writing to look over your practice essays and point out your errors. If you're really struggling with spelling, simply typing up your (handwritten) essay into a program like Microsoft Word and running spellcheck can alert you to problems. We've also got a great set of articles up on our blog about SAT Writing questions that may help you better understand any grammatical errors you are making.

How Do I Use The SAT Essay Grading Rubric?

Now that you understand the SAT essay rubric, how can you use it in your SAT prep? There are a couple of different ways.

Use The SAT Essay Rubric To...Shape Your Essays

Since you know what the SAT is looking for in an essay, you can now use that knowledge to guide what you write about in your essays!

A tale from my youth: when I was preparing to take the SAT for the first time, I did not really know what the essay was looking for, and assumed that since I was a good writer, I’d be fine.

Not true! The most important part of the SAT essay is using specific examples from the passage and explaining how they convince the reader of the author's point. By reading this article and realizing there's more to the essay than "being a strong writer," you’re already doing better than high school me.


Use The SAT Essay Rubric To...Grade Your Practice Essays

The SAT can’t exactly give you an answer key to the essay. Even when an example of an essay that scored a particular score is provided, that essay will probably use different examples than you did, make different arguments, maybe even argue different interpretations of the text...making it difficult to compare the two. The SAT essay rubric is the next best thing to an answer key for the essay - use it as a lens through which to view and assess your essay.

Of course, you don’t have the time to become an expert SAT essay grader - that’s not your job. You just have to apply the rubric as best as you can to your essays and work on fixing your weak areas. For the sentence structure, grammar, usage, and mechanics stuff, I highly recommend asking a friend, teacher, or family member who is really good at (English) writing to take a look over your practice essays and point out the mistakes.

If you really want custom feedback on your practice essays from experienced essay graders, may I also suggest the PrepScholar test prep platform? I manage the essay grading and so I happen to know quite a bit about the essay part of this platform, which gives you both an essay grade and custom feedback for each essay you complete. Learn more about how it all works here.

What’s Next?

Are you so excited by this article that you want to read even more articles on the SAT essay? Of course you are. Don't worry, I’ve got you covered. Learn how to write an SAT essay step-by-step and read about the 6 types of SAT essay prompts.

Want to go even more in depth with the SAT essay? We have a complete list of past SAT essay prompts as well as tips and strategies for how to get a 12 on the SAT essay.

Still not satisfied? Maybe a five-day free trial of our very own PrepScholar test prep platform (which includes essay practice and feedback) is just what you need.

Trying to figure out whether the old or new SAT essay is better for you? Take a look at our article on the new SAT essay assignment to find out!


Laura graduated magna cum laude from Wellesley College with a BA in Music and Psychology, and earned a Master's degree in Composition from the Longy School of Music of Bard College. She scored in the 99th percentile on the SAT and GRE and loves advising students on how to excel in high school.

Student and Parent Forum

Our new student and parent forum, at ExpertHub.PrepScholar.com , allow you to interact with your peers and the PrepScholar staff. See how other students and parents are navigating high school, college, and the college admissions process. Ask questions; get answers.

Ask a Question Below

Have any questions about this article or other topics? Ask below and we'll reply!

Improve With Our Famous Guides

- For All Students

The 5 Strategies You Must Be Using to Improve 160+ SAT Points

How to Get a Perfect 1600, by a Perfect Scorer

Series: How to Get 800 on Each SAT Section:

Score 800 on SAT Math

Score 800 on SAT Reading

Score 800 on SAT Writing

Series: How to Get to 600 on Each SAT Section:

Score 600 on SAT Math

Score 600 on SAT Reading

Score 600 on SAT Writing

Free Complete Official SAT Practice Tests

What SAT Target Score Should You Be Aiming For?

15 Strategies to Improve Your SAT Essay

The 5 Strategies You Must Be Using to Improve 4+ ACT Points

How to Get a Perfect 36 ACT, by a Perfect Scorer

Series: How to Get 36 on Each ACT Section:

36 on ACT English

36 on ACT Math

36 on ACT Reading

36 on ACT Science

Series: How to Get to 24 on Each ACT Section:

24 on ACT English

24 on ACT Math

24 on ACT Reading

24 on ACT Science

What ACT target score should you be aiming for?

ACT Vocabulary You Must Know

ACT Writing: 15 Tips to Raise Your Essay Score

How to Get Into Harvard and the Ivy League

How to Get a Perfect 4.0 GPA

How to Write an Amazing College Essay

What Exactly Are Colleges Looking For?

Is the ACT easier than the SAT? A Comprehensive Guide

Should you retake your SAT or ACT?

When should you take the SAT or ACT?

Stay Informed

Get the latest articles and test prep tips!

Looking for Graduate School Test Prep?

Check out our top-rated graduate blogs here:

GRE Online Prep Blog

GMAT Online Prep Blog

TOEFL Online Prep Blog

Holly R. "I am absolutely overjoyed and cannot thank you enough for helping me!”

- Grades 6-12

- School Leaders

FREE Poetry Worksheet Bundle! Perfect for National Poetry Month.

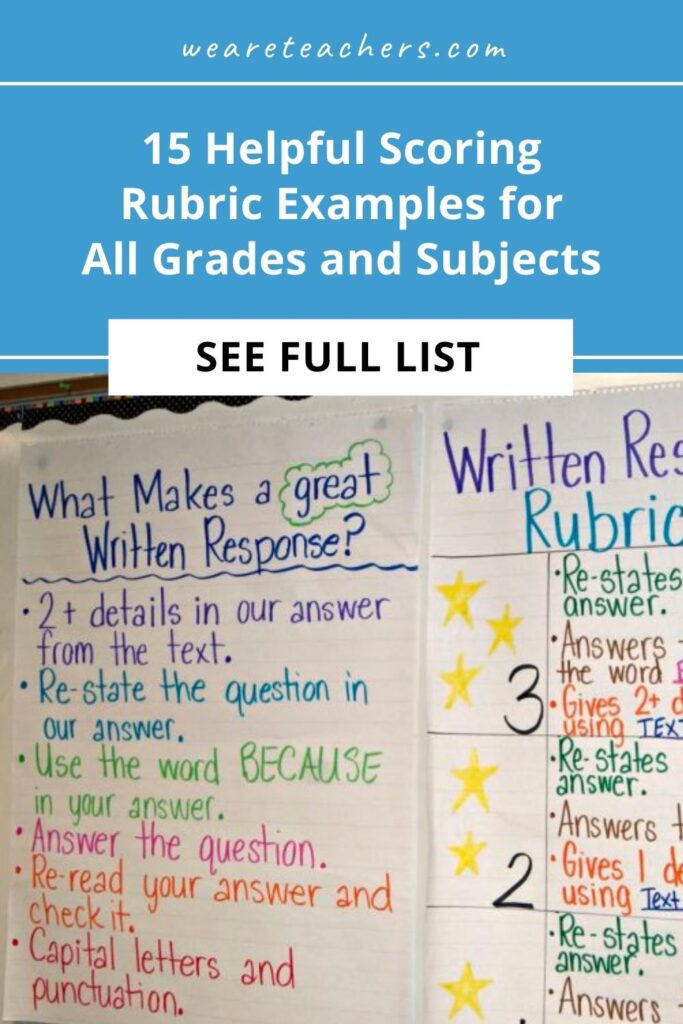

15 Helpful Scoring Rubric Examples for All Grades and Subjects

In the end, they actually make grading easier.

When it comes to student assessment and evaluation, there are a lot of methods to consider. In some cases, testing is the best way to assess a student’s knowledge, and the answers are either right or wrong. But often, assessing a student’s performance is much less clear-cut. In these situations, a scoring rubric is often the way to go, especially if you’re using standards-based grading. Here’s what you need to know about this useful tool, along with lots of rubric examples to get you started.

What is a scoring rubric?

In the United States, a rubric is a guide that lays out the performance expectations for an assignment. It helps students understand what’s required of them, and guides teachers through the evaluation process. (Note that in other countries, the term “rubric” may instead refer to the set of instructions at the beginning of an exam. To avoid confusion, some people use the term “scoring rubric” instead.)

A rubric generally has three parts:

- Performance criteria: These are the various aspects on which the assignment will be evaluated. They should align with the desired learning outcomes for the assignment.

- Rating scale: This could be a number system (often 1 to 4) or words like “exceeds expectations, meets expectations, below expectations,” etc.

- Indicators: These describe the qualities needed to earn a specific rating for each of the performance criteria. The level of detail may vary depending on the assignment and the purpose of the rubric itself.
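To make the three parts concrete, here is a minimal Python sketch that models a rubric as data. It assumes a numeric 1-to-4 rating scale. The `Criterion` and `Rubric` classes, the `validate` check and the sample essay criteria are hypothetical illustrations, not taken from any rubric in this article.

```python
from dataclasses import dataclass, field


@dataclass
class Criterion:
    """One performance criterion (hypothetical example structure)."""
    name: str
    # Indicators: the description a student's work must match to earn each rating.
    indicators: dict[int, str]


@dataclass
class Rubric:
    title: str
    # Rating scale; a 1-to-4 numeric scale is assumed here.
    scale: tuple[int, ...] = (1, 2, 3, 4)
    criteria: list[Criterion] = field(default_factory=list)

    def validate(self) -> None:
        """Raise ValueError if any criterion lacks an indicator for some rating."""
        for criterion in self.criteria:
            missing = set(self.scale) - set(criterion.indicators)
            if missing:
                raise ValueError(f"{criterion.name!r} has no indicator for {sorted(missing)}")


# Hypothetical two-criterion essay rubric.
essay = Rubric(
    title="Essay",
    criteria=[
        Criterion("Thesis", {1: "No clear thesis", 2: "Thesis is vague",
                             3: "Clear thesis", 4: "Clear, insightful thesis"}),
        Criterion("Organization", {1: "Ideas are out of order", 2: "Some logical order",
                                   3: "Logical order", 4: "Logical order with smooth transitions"}),
    ],
)
essay.validate()  # no error: both criteria describe every rating from 1 to 4
```

The `validate` check mirrors the role of indicators. Every criterion needs a description for every rating, or students won't know what a given score means.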

Rubrics take more time to develop up front, but they help ensure more consistent assessment, especially when the skills being assessed are more subjective. A well-developed rubric can actually save teachers a lot of time when it comes to grading. What’s more, sharing your scoring rubric with students in advance often helps improve performance. This way, students have a clear picture of what’s expected of them and what they need to do to achieve a specific grade or performance rating.


Types of Rubrics

There are three basic rubric categories, each with its own purpose.

Holistic Rubric

Source: Cambrian College

This type of rubric combines all the scoring criteria in a single scale. Holistic rubrics are quick to create and use, but they have drawbacks. If a student’s work spans different levels, it can be difficult to decide which score to assign. They also make it harder to give feedback on specific aspects of the work.

Traditional letter grades are a type of holistic rubric. So are the popular “hamburger rubric” and “cupcake rubric” examples.

Analytic Rubric

Source: University of Nebraska

Analytic rubrics are much more complex and generally take a great deal more time up front to design. They spell out the expected learning outcomes in detail and describe what is required to earn each performance rating for each criterion. Each rating is assigned a point value, and the total number of points earned determines the overall grade for the assignment.

Though they’re more time-intensive to create, analytic rubrics actually save time while grading. Teachers can simply circle or highlight any relevant phrases in each rating, and add a comment or two if needed. They also help ensure consistency in grading, and make it much easier for students to understand what’s expected of them.
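The scoring arithmetic behind an analytic rubric is simple. Look up the point value of the rating given for each criterion, then add them up. The sketch below assumes a three-level scale worth 3, 2 and 1 points and four made-up essay criteria. Both are illustrative assumptions, not values from the University of Nebraska example, and `analytic_score` is a hypothetical helper.

```python
# Assumed three-level scale: the point value for each rating label.
LEVELS = {"exceeds expectations": 3, "meets expectations": 2, "below expectations": 1}

# Hypothetical analytic rubric: every criterion shares one scale here,
# but each criterion could carry its own point values.
RUBRIC = {criterion: LEVELS for criterion in ("Thesis", "Evidence", "Organization", "Conventions")}


def analytic_score(ratings: dict[str, str], rubric: dict[str, dict[str, int]] = RUBRIC) -> tuple[int, int]:
    """Return (points earned, points possible) for one student's ratings."""
    missing = rubric.keys() - ratings.keys()
    if missing:
        raise ValueError(f"No rating given for: {sorted(missing)}")
    # Points earned: the value of the rating chosen for each criterion.
    earned = sum(rubric[c][ratings[c]] for c in rubric)
    # Points possible: the highest value available for each criterion.
    possible = sum(max(levels.values()) for levels in rubric.values())
    return earned, possible


earned, possible = analytic_score({
    "Thesis": "meets expectations",
    "Evidence": "exceeds expectations",
    "Organization": "meets expectations",
    "Conventions": "below expectations",
})
print(f"{earned}/{possible}")  # 8/12
```

Keeping the point values in the rubric itself, rather than in the scoring code, means you can reweight a criterion without touching the arithmetic.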


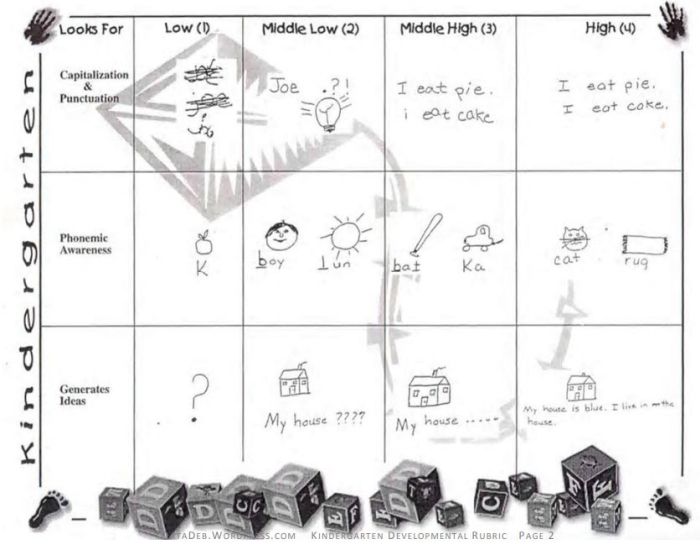

Developmental Rubric

Source: Deb’s Data Digest

A developmental rubric is a type of analytic rubric, but it’s used to assess progress along the way rather than determining a final score on an assignment. The details in these rubrics help students understand their achievements, as well as highlight the specific skills they still need to improve.

Unlike other analytic rubrics, though, developmental rubrics leave off the point values and focus instead on giving feedback through the criteria and performance indicators.
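Because a developmental rubric drops the point values, its output is feedback rather than a total. This hypothetical Python sketch sorts each criterion into achievements or next steps by comparing the student’s level with a goal level. The level names, the reading-fluency criteria and the indicator wording are all invented for illustration.

```python
# Ordered performance levels, lowest to highest; no point values attached.
LEVELS = ["beginning", "developing", "proficient"]

# Hypothetical indicators for two reading-fluency criteria.
INDICATORS = {
    "Expression": {
        "beginning": "reads word by word in a monotone",
        "developing": "adds some expression at punctuation",
        "proficient": "varies tone and pace to match the meaning",
    },
    "Self-correction": {
        "beginning": "rarely notices errors",
        "developing": "notices some errors but does not fix them",
        "proficient": "notices and fixes errors while reading",
    },
}


def developmental_feedback(ratings: dict[str, str], goal: str = "proficient") -> dict[str, list[str]]:
    """Sort each criterion into achievements or next steps relative to a goal level."""
    goal_rank = LEVELS.index(goal)
    feedback: dict[str, list[str]] = {"achievements": [], "next_steps": []}
    for criterion, level in ratings.items():
        if LEVELS.index(level) >= goal_rank:
            feedback["achievements"].append(f"{criterion}: {INDICATORS[criterion][level]}")
        else:
            # Quote the goal-level indicator so the student sees the target skill.
            feedback["next_steps"].append(f"{criterion}: work toward '{INDICATORS[criterion][goal]}'")
    return feedback


print(developmental_feedback({"Expression": "developing", "Self-correction": "proficient"}))
```

Comparing positions in an ordered list, instead of summing numbers, keeps the focus on what the student has achieved and what to work on next.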


Ready to create your own rubrics? Check out these examples across all grades and subjects for inspiration.

Elementary School Rubric Examples

These elementary school rubric examples come from real teachers who use them with their students. Adapt them to fit your needs and grade level.

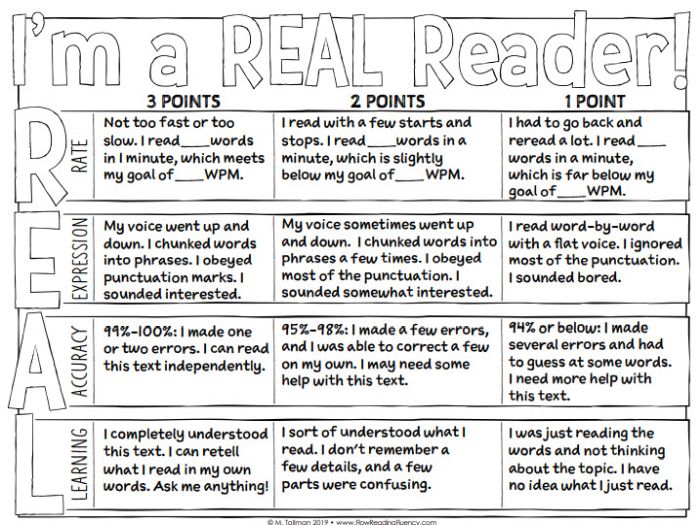

Reading Fluency Rubric

You can use this one as an analytic rubric by counting up points to earn a final score, or just to provide developmental feedback. There’s a second rubric page available specifically to assess prosody (reading with expression).

Learn more: Teacher Thrive

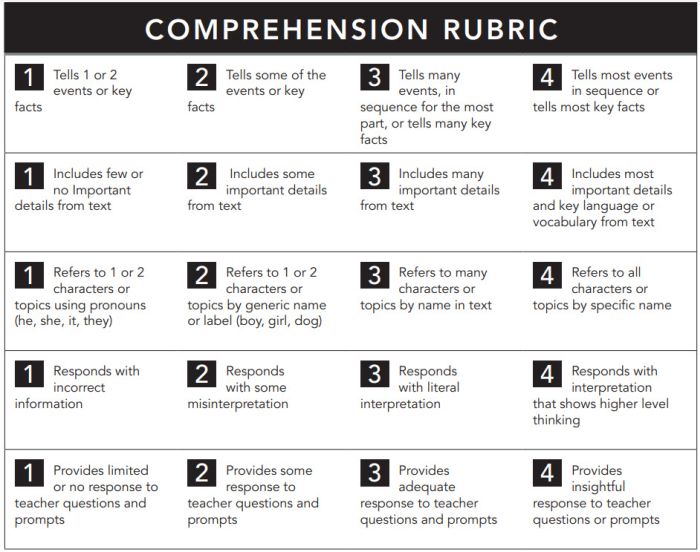

Reading Comprehension Rubric

The nice thing about this rubric is that you can use it at any grade level, for any text. If you like this style, the same source also offers a reading fluency rubric.

Learn more: Pawprints Resource Center

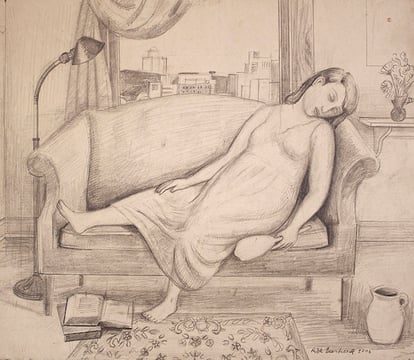

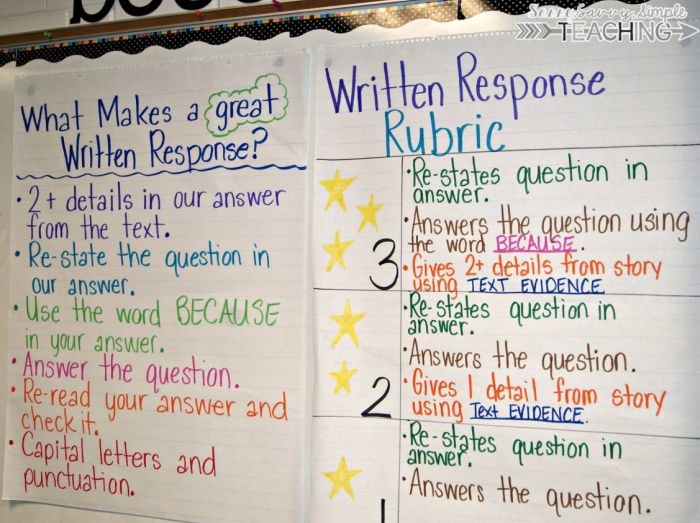

Written Response Rubric

Rubrics aren’t just for huge projects. They can also help kids work on very specific skills, like this one for improving written responses on assessments.

Learn more: Dianna Radcliffe: Teaching Upper Elementary and More

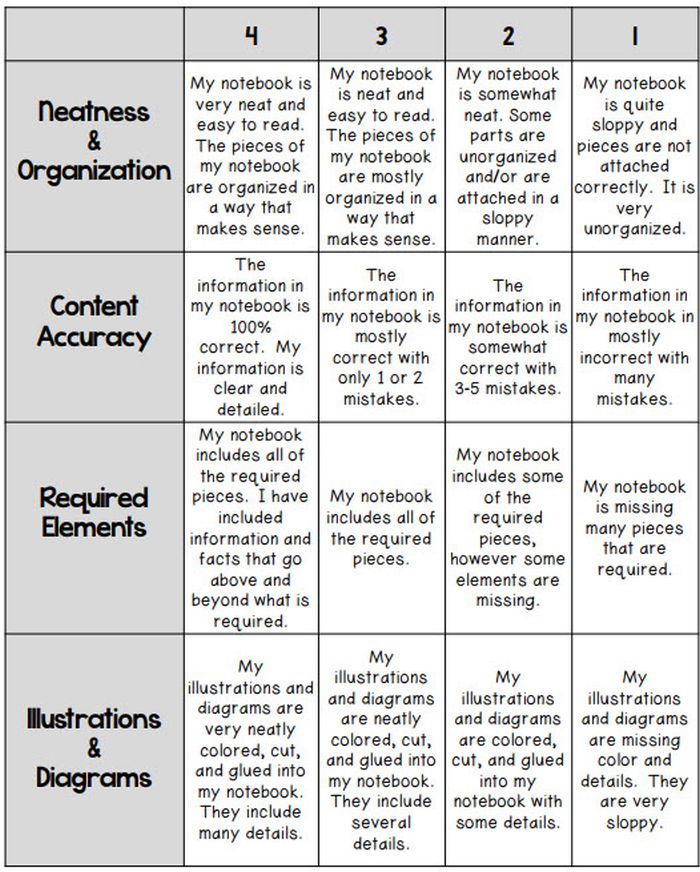

Interactive Notebook Rubric

If you use interactive notebooks as a learning tool, this rubric can help kids stay on track and meet your expectations.

Learn more: Classroom Nook

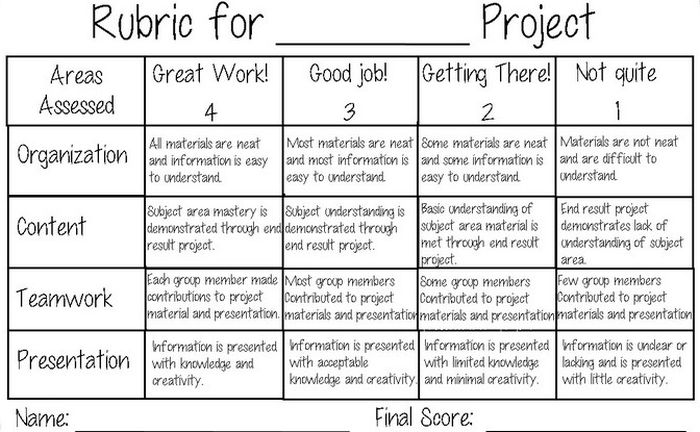

Project Rubric

Use this simple rubric as it is, or tweak it to include more specific indicators for the project you have in mind.

Learn more: Tales of a Title One Teacher

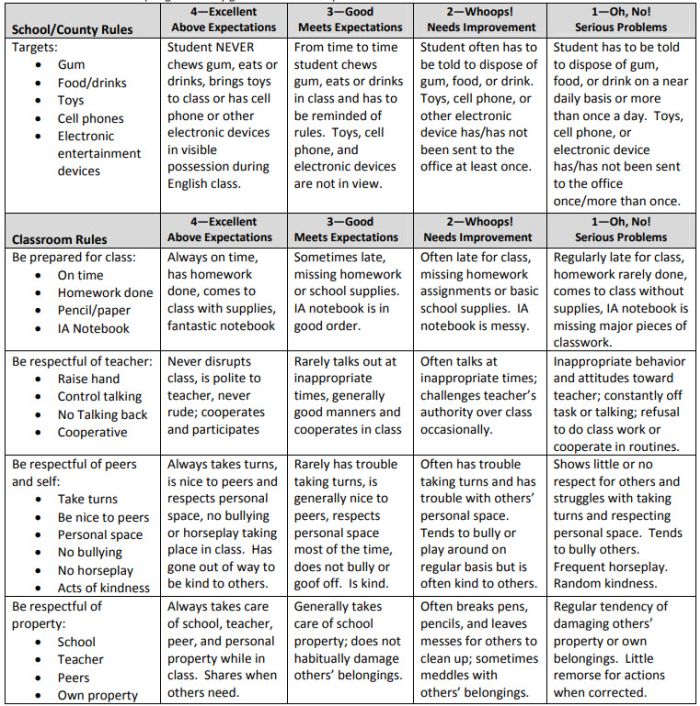

Behavior Rubric

Developmental rubrics are perfect for assessing behavior and helping students identify opportunities for improvement. Send these home regularly to keep parents in the loop.

Learn more: Teachers.net Gazette

Middle School Rubric Examples

In middle school, use rubrics to offer detailed feedback on projects, presentations, and more. Be sure to share them with students in advance, and encourage students to refer to them as they work so they’ll know whether they’re meeting expectations.

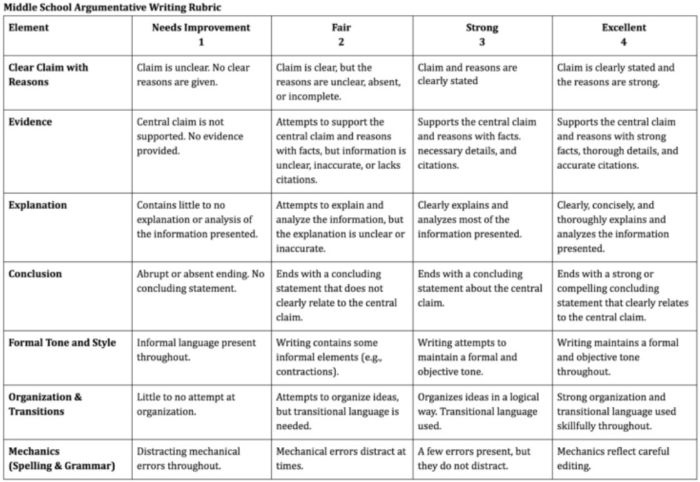

Argumentative Writing Rubric

Argumentative writing is a part of language arts, social studies, science, and more. That makes this rubric especially useful.

Learn more: Dr. Catlin Tucker

Role-Play Rubric

Role-plays can be really useful when teaching social and critical thinking skills, but they can be hard to assess. Try a rubric like this one to evaluate them and provide useful feedback.

Learn more: A Question of Influence

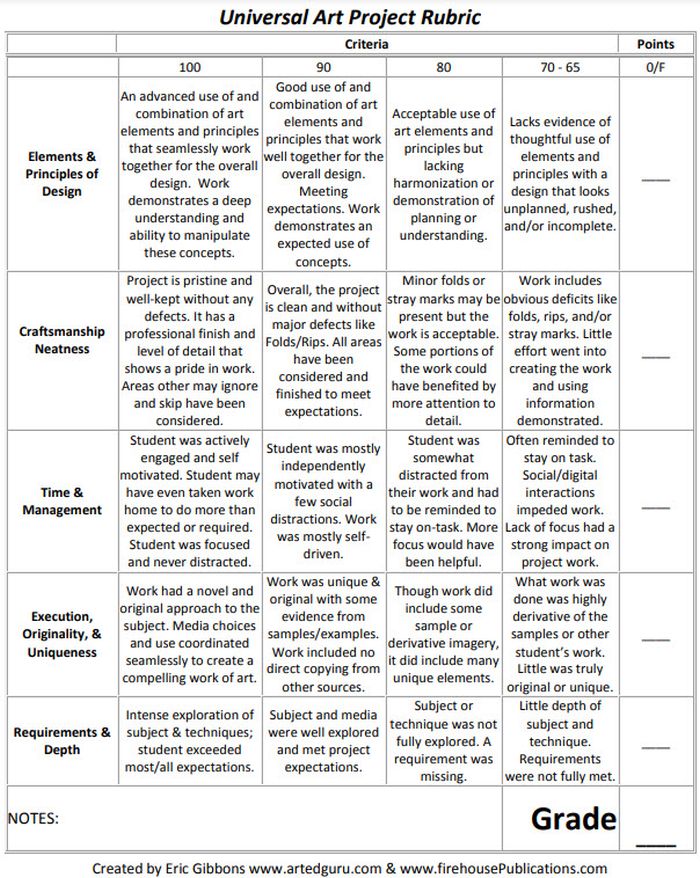

Art Project Rubric

Art is one of those subjects where grading can feel very subjective. Bring some objectivity to the process with a rubric like this.

Source: Art Ed Guru

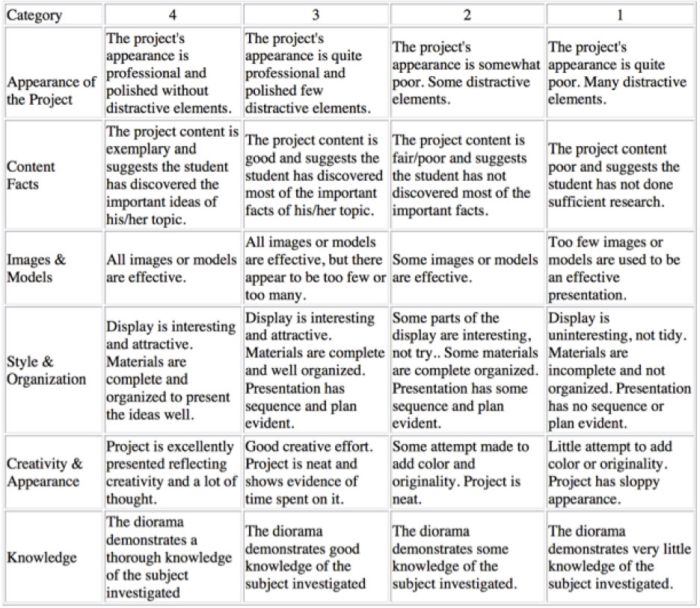

Diorama Project Rubric

You can use diorama projects in almost any subject, and they’re a great chance to encourage creativity. Simplify the grading process and help kids know how to make their projects shine with this scoring rubric.

Learn more: Historyourstory.com

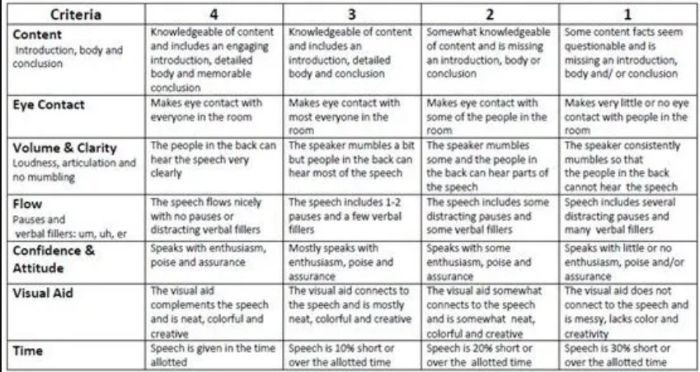

Oral Presentation Rubric

Rubrics are terrific for grading presentations, since you can include a variety of skills and other criteria. Consider letting students use a rubric like this to offer peer feedback too.

Learn more: Bright Hub Education

High School Rubric Examples

In high school, it’s important to include your grading rubrics when you give assignments like presentations, research projects, or essays. Students who go on to college will likely encounter rubrics there, so getting familiar with them now will pay off later.

Presentation Rubric

Analyze a student’s presentation both for content and communication skills with a rubric like this one. If needed, create a separate one for content knowledge with even more criteria and indicators.

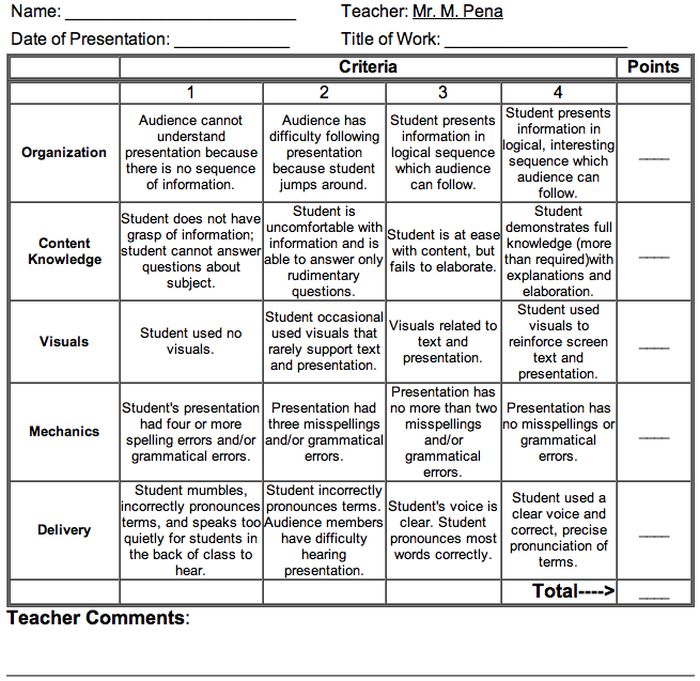

Learn more: Michael A. Pena Jr.

Debate Rubric

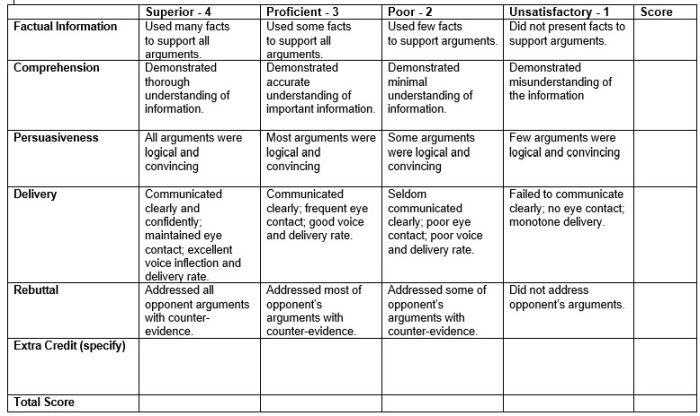

Debate is a valuable learning tool that encourages critical thinking and oral communication skills. This rubric can help you assess those skills objectively.

Learn more: Education World

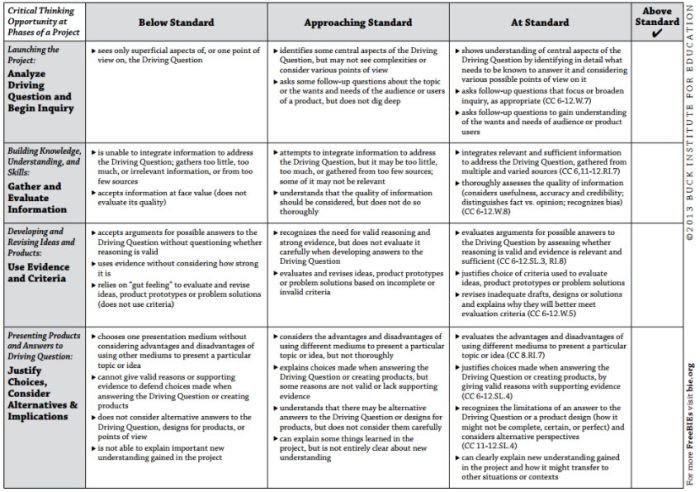

Project-Based Learning Rubric

Implementing project-based learning can be time-intensive, but the payoffs are worth it. Try this rubric to make student expectations clear and end-of-project assessment easier.

Learn more: Free Technology for Teachers

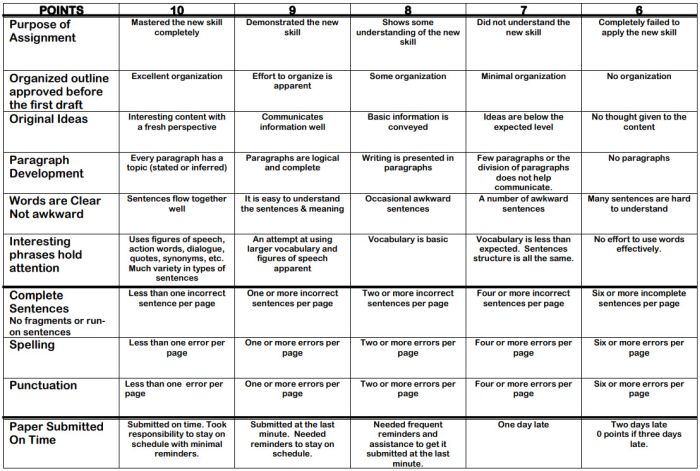

100-Point Essay Rubric

Need an easy way to convert a scoring rubric to a letter grade? This example for essay writing earns students a final score out of 100 points.

Learn more: Learn for Your Life
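If your rubric isn’t already out of 100, one way to convert it is to rescale the total to 100 and then apply letter-grade cutoffs. The sketch assumes the common 90/80/70/60 cutoffs, and your school’s scale may differ. The function names are hypothetical.

```python
# Assumed cutoffs (a common U.S. 90/80/70/60 scale); replace with your school's policy.
CUTOFFS = [(90, "A"), (80, "B"), (70, "C"), (60, "D")]


def to_hundred(earned: float, possible: float) -> float:
    """Rescale any rubric total to a score out of 100."""
    if possible <= 0:
        raise ValueError("possible points must be positive")
    return 100 * earned / possible


def letter_grade(score: float) -> str:
    """Map a 0-100 score to a letter using the assumed cutoffs, highest first."""
    for cutoff, letter in CUTOFFS:
        if score >= cutoff:
            return letter
    return "F"


print(letter_grade(87))                   # B
print(letter_grade(to_hundred(18, 24)))  # 18/24 rescales to 75.0, so C
```

A rubric that already totals 100 points, like this essay example, can skip `to_hundred` and go straight to `letter_grade`.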

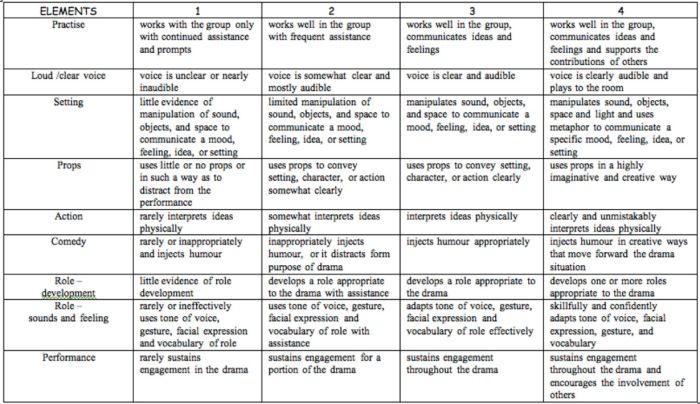

Drama Performance Rubric

If you’re unsure how to grade a student’s participation and performance in drama class, consider this example. It offers lots of objective criteria and indicators to evaluate.

Learn more: Chase March

How do you use rubrics in your classroom? Come share your thoughts and exchange ideas in the WeAreTeachers HELPLINE group on Facebook.


Copyright © 2023. All rights reserved. 5335 Gate Parkway, Jacksonville, FL 32256


Writing High-Scoring IELTS Essays: A Step-by-Step Guide

Writing great IELTS essays is essential for success. This guide will give you the tools to craft high-scoring essays. It’ll focus on structuring your thoughts, using appropriate vocabulary and grammar, and expressing ideas with clarity. We’ll also look at essay types and strategies for managing your time during the writing exam.

Practice is key. Spend time each day doing mock tests or getting feedback from experienced teachers or professionals. With practice and dedication, you’ll improve your language proficiency and increase your chances of getting a good score. Good luck!

Understanding the IELTS Essay Task

To excel in the IELTS essay task, you need a solid understanding of its requirements. The sub-sections that follow cover what the task expects and the types of questions you may encounter. Mastering both will set you up to write compelling, high-scoring essays.

What is expected in the IELTS essay task

The IELTS essay task requires candidates to demonstrate their writing skills within a set time limit. It evaluates their ability to produce a coherent, well-structured piece of writing.

A clear thesis is a must. It should be succinct and convey the main idea of the essay. The essay also needs a logical structure: an introduction, body paragraphs, and a conclusion. The content should be relevant, using suitable examples, evidence, and arguments to support the main idea. Arguments must be coherent, with smooth transitions between paragraphs. Finally, use formal language, correct grammar, and accurate syntax.

In addition, candidates must show critical thinking by analyzing the topic and presenting a balanced argument, and they must manage their time well enough to produce a thorough answer that meets the minimum word count.

To illustrate why these requirements matter in real life, let me tell you about Jennifer, an aspiring nurse from Brazil who was taking the IELTS. At first, she struggled with the essay task. She asked expert tutors for help, and they stressed the importance of a clear thesis statement and a logical organization of ideas. With effort and dedication, Jennifer mastered these skills and eventually achieved her target band score.

The types of questions asked in the IELTS essay task

The IELTS essay task includes several types of questions. To get a sense of their variety, let’s look at the main types.

To do well, you need to prepare and practice for each type. Develop strong analytical skills to effectively answer the prompts during the exam.

Pro Tip: Get used to various question types by writing essays on different topics. This will help you adjust and boost your performance.

Descriptive questions

This section focuses on descriptive questions. Specific, unique details make a description clearer and more engaging. Two habits help here: manage your time, and plan a clear structure before you write. Both keep the writing coherent and give it a logical flow.


Argumentative questions

Argumentative questions require a thorough analysis of a topic and a discussion of multiple perspectives on it.

They come in different types, such as:

- Cause and Effect (e.g. What are the consequences of using social media?)

- Pros and Cons (e.g. Should zoos be forbidden?)

- Agree or Disagree (e.g. Is homework essential for students?)

These questions push candidates to think logically, weigh evidence, and build a convincing argument with a clear order and sound reasoning.

According to the British Council, the IELTS essay task assesses a candidate’s ability to present an argument in a clear, understandable, and well-structured way.

Advantages and disadvantages questions

Advantages and disadvantages questions require a balanced overview of both the positive and negative sides of an issue.

These questions give you the opportunity to show understanding by discussing diverse points of view. However, answer them with care: if you don’t tackle them objectively, your response can become one-sided or biased.

Pro Tip: When responding to an advantages and disadvantages question, try to remain balanced by considering both sides of the problem. This will help you create an in-depth reply.

Problem and solution questions

Problem and solution questions require the test-taker to identify a problem and propose effective solutions. Here are six tips to help you excel in this IELTS essay type:

- Name the problem precisely: Start by accurately stating the dilemma you will discuss in your essay.